In the world of data processing, the term ETL - pipeline frequently emerges as a vital concept. ETL stands for Extract, Transform, Load, which encapsulates the processes involved in moving data from one system to another, ensuring that it is adequately prepared for analysis. Spark Big Data enables efficient ETL processes by leveraging distributed computing to handle large volumes of data quickly and effectively. The ETL process in modern data analytics frequently utilizes big data Hadoop Spark to efficiently manage and process large volumes of data.As organizations increasingly rely on data-driven decision-making, understanding ETL - pipelines becomes essential for anyone looking to dive into the realm of data analytics. In this comprehensive guide, we will explore the intricacies of ETL - pipelines, with a special focus on ETL data pipelines and how to implement them using Python.

1. What is an ETL Pipeline?

An ETL - pipeline is a series of processes that facilitate the movement of data from various sources to a destination, typically a data warehouse or a data lake. The primary goal of an ETL - pipeline is to consolidate data, making it easier to analyze and derive insights.

Key Features of an ETL - Pipeline:

- Data Integration: Combines data from multiple sources into a unified format.

- Data Preparation: Cleans, transforms, and prepares data for analysis.

- Automation: Streamlines data workflows to reduce manual intervention and minimize errors.

In essence, an ETL - pipeline allows organizations to harness their data efficiently, enabling faster decision-making and improved business intelligence.

2. Why Use an ETL - Pipeline?

Organizations leverage ETL - pipelines for various reasons, including:

- Centralized Data Management: ETL - pipelines aggregate data from disparate sources, providing a centralized view of information.

- Improved Data Quality: Through the transformation process, ETL - pipelines enhance data quality by removing duplicates, correcting errors, and standardizing formats.

- Faster Insights: Automated ETL processes speed up data collection and analysis, allowing organizations to gain insights in real-time.

- Scalability: ETL - pipelines can adapt to growing data volumes, accommodating increasing business needs without significant rework.

In summary, an ETL - pipeline is crucial for organizations seeking to maintain a competitive edge in today’s data-driven landscape.

3. Components of an ETL Pipeline

This pipeline consists of three core components: Extract, Transform, and Load. Let’s delve deeper into each of these components.

Extract

The Extract phase involves retrieving data from various sources, which can include:

- Databases (e.g., MySQL, PostgreSQL)

- APIs (e.g., RESTful APIs)

- Flat files (e.g., CSV, JSON)

- Streaming data (e.g., IoT devices)

The primary goal of this phase is to collect all relevant data required for analysis without altering its integrity. Extracting data efficiently is crucial, as it lays the foundation for the subsequent stages of the ETL - pipeline.

Transform

The Transform phase is where the real magic happens. During this stage, the extracted data is processed and transformed to meet the requirements of the destination system. Key activities in the transformation phase include:

- Data Cleaning: Removing duplicates, correcting errors, and filling in missing values.

- Data Mapping: Converting data from one format to another, ensuring compatibility with the destination system.

- Aggregation: Summarizing data to provide higher-level insights (e.g., calculating averages or totals).

- Data Enrichment: Enhancing data by adding additional context or information from other sources.

This phase is critical, as the quality of the transformation directly impacts the reliability of the insights derived from the data.

Load

The Load phase is the final step in the ETL process, where the transformed data is loaded into the target system. This can involve:

- Full Load: Loading all data into the target system, usually performed during initial migrations.

- Incremental Load: Loading only the new or updated data since the last load, which is often more efficient for ongoing operations.

The load process should ensure data integrity and accuracy in the target system, enabling analysts to work with reliable data.

4. ETL - Pipeline Process Flow

To visualize the ETL process, consider the following flow:

- Data Extraction: Data is collected from multiple sources.

- Data Transformation: The extracted data undergoes cleaning, mapping, aggregation, and enrichment.

- Data Loading: The transformed data is loaded into a target system for analysis.

- Data Analysis: Analysts use the data to generate reports, dashboards, and insights.

This cycle can be continuous, with data flowing through the ETL - pipeline regularly, ensuring that the target system remains updated with the latest information.

5. Types of ETL - Pipelines

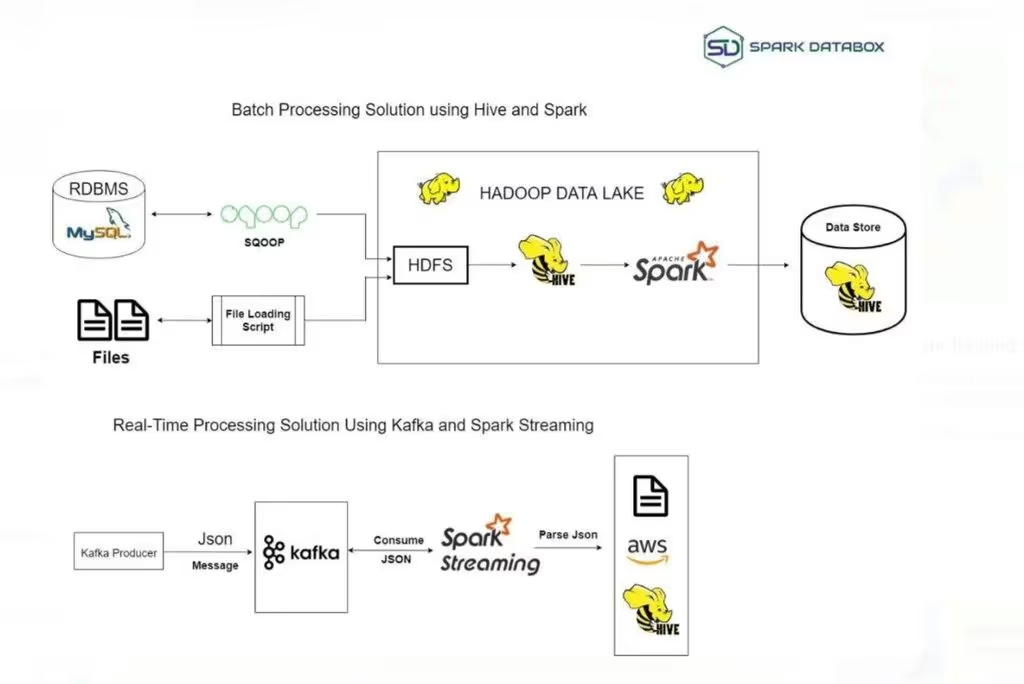

ETL - pipelines can be classified into two main types: batch ETL and real-time ETL. Each type serves specific business needs.

Batch ETL

Batch ETL processes data in large chunks or batches at scheduled intervals (e.g., nightly, weekly). This approach is suitable for organizations that do not require immediate insights and can afford to wait for periodic updates. Benefits of batch ETL include:

- Efficiency: Processing large volumes of data at once can be more resource-efficient.

- Simplicity: Batch processes are often easier to manage and maintain.

However, the downside is that businesses might miss real-time insights, making it less suitable for environments that demand immediate data analysis.

Real-Time ETL

Real-Time ETL processes data as it is generated, allowing for immediate insights and analysis. This type of pipeline is essential for applications that require up-to-the-minute data, such as:

- Online transaction processing

- Monitoring systems

- Fraud detection

Real-time ETL often involves more complex architectures and technologies, but the benefits include timely decision-making and improved responsiveness to changing business conditions.

6. ETL - Pipeline Tools

Several ETL tools and platforms can help organizations design and implement effective ETL - pipelines. Some popular ETL tools include:

- Apache NiFi: An open-source tool designed for data routing and transformation.

- Talend: A comprehensive data integration platform that provides various ETL solutions.

- Apache Airflow: A platform to programmatically author, schedule, and monitor workflows, often used for building ETL - pipelines.

- Microsoft SQL Server Integration Services (SSIS): A popular ETL tool for data integration within the Microsoft ecosystem.

These tools simplify the ETL process, allowing users to focus on data analysis rather than the complexities of data integration.

7. Implementing an ETL - Pipeline Using Python

Python is a versatile programming language that has become a popular choice for building ETL - pipelines. Its rich ecosystem of libraries and frameworks makes it easy to handle data extraction, transformation, and loading. Here’s a step-by-step guide to implementing an ETL - pipeline in Python:

Step 1: Set Up Your Environment

First, you’ll need to set up your Python environment. You can use virtual environments to manage dependencies efficiently. Install the necessary libraries:

bash

Copy code

pip install pandas sqlalchemy requests

Step 2: Extract Data

In this example, we will extract data from a CSV file. The pandas library simplifies this process.

python

Copy code

import pandas as pd

def extract_data(file_path):

return pd.read_csv(file_path)

data = extract_data('data/source_data.csv')

Step 3: Transform Data

Next, we will perform some transformations on the data, such as cleaning and mapping.

python

Copy code

def transform_data(data):

# Remove duplicates

data = data.drop_duplicates()

# Fill missing values

data.fillna(0, inplace=True)

# Rename columns

data.rename(columns={'old_name': 'new_name'}, inplace=True)

return data

transformed_data = transform_data(data)

Step 4: Load Data

Finally, we will load the transformed data into a database. In this example, we will use SQLAlchemy to connect to a PostgreSQL database.

python

Copy code

from sqlalchemy import create_engine

def load_data(data, db_url):

engine = create_engine(db_url)

data.to_sql('target_table', con=engine, if_exists='replace', index=False)

load_data(transformed_data, 'postgresql://user:password@localhost:5432/mydatabase')

Step 5: Automate the ETL - Pipeline

To automate the ETL - pipeline, you can schedule the script to run at specific intervals using cron jobs or task scheduling libraries like APScheduler.

python

Copy code

from apscheduler.schedulers.blocking import BlockingScheduler

scheduler = BlockingScheduler()

scheduler.add_job(run_etl_pipeline, 'interval', hours=1)

scheduler.start()

This basic implementation demonstrates how Python can be used to create a simple ETL - pipeline. More complex ETL - pipelines can incorporate additional libraries and frameworks, such as Dask for distributed processing or Luigi for workflow management.

8. Best Practices for ETL - Pipelines

To ensure the effectiveness and reliability of your ETL - pipelines, consider the following best practices:

- Data Validation: Implement checks to ensure the quality and accuracy of the data being processed.

- Logging and Monitoring: Maintain logs of ETL processes and set up monitoring to detect and resolve issues promptly.

- Scalability: Design ETL - pipelines to scale easily as data volumes grow, using cloud services if necessary.

- Documentation: Keep comprehensive documentation of your ETL processes, including data sources, transformations, and workflows.

- Regular Updates: Periodically review and update ETL processes to adapt to changing business needs and data sources.

By following these best practices, you can create robust ETL - pipelines that support your organization’s data needs.

Conclusion

In today’s data-centric world, understanding ETL - pipelines is essential for anyone looking to harness the power of data. ETL - pipelines facilitate the extraction, transformation, and loading of data, enabling organizations to gain valuable insights for better decision-making.The ETL process in Spark Big Data allows organizations to streamline data extraction, transformation, and loading, making it easier to analyze vast datasets in real time.

By leveraging tools and programming languages like Python, businesses can efficiently implement ETL data pipelines tailored to their specific requirements. As data continues to grow in volume and complexity, mastering ETL processes will be a critical skill for data professionals.

In summary, whether you’re a beginner or a seasoned professional, a solid grasp of ETL - pipelines will undoubtedly enhance your ability to navigate the exciting field of data analytics. Embrace the journey, and let the power of data transform your organization!

Ready to transform your AI career? Join our expert-led courses at SkillCamper today and start your journey to success. Sign up now to gain in-demand skills from industry professionals.

If you're a beginner, take the first step toward mastering Python! Check out this Fullstack Generative AI course to get started with the basics and advance to complex topics at your own pace.

To stay updated with latest trends and technologies, to prepare specifically for interviews, make sure to read our detailed blogs:

How to Become a Data Analyst: A Step-by-Step Guide

How Business Intelligence Can Transform Your Business Operations

.avif)